I was going to write about marketing metrics this week, but I thought I'd return to a topic, Conversational AI, for one more post before we move on to other topics.

Marketers are using a bunch of technologies today that benefit from AI. A/B Testing of Ads. Subject line variations on emails. Landing page headlines. All sorts of little helpers.

Last fall I was at a conference with a bunch of marketing types. Everyone was talking about NFTs. :Eyeroll: AI was also a hot topic. I still don't get NFTs (aside from a cool way of validating identity). But AI, that's something that I've applied in software for several years. It's the real deal.

The AI savants were all talking about Jasper. Jasper was a magical device that would create content automatically. Not only was it a boon for creating blog posts but it could be used for practically any content task. I've toyed with it. As a writer, I don't find its output particularly compelling. It's solidly satisfactory.

And now the mother of all content manufacturing engines has emerged. ChatGPT.

The Truth Problem

If you scroll through the ChatGPT Reddit you will find no shortage of examples of where ChatGPT is telling howlers. And it's no surprise. ChatGPT has no understanding of the truth or facts or any other similar concept.

ChatGPT only understands language. It can assess which words are normally near other words and then use that knowledge to answer questions. Those answers are always eloquent. Sometimes those answers—the connections the algorithm makes among words—are not correct. Something is represented as a fact when it's not. This is a problem if we're to rely upon these sorts of models to help us run our lives.

Can we solve the problem by only training ChatGPT on material that we know to be factually correct? Would that solve the problem? It would not. In the same manner, if we trained ChatGPT on content we knew to be incorrect we could still get correct answers. Again, the answers the tech creates are based on word patterns.
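To make the word-pattern point concrete, here is a toy sketch in Python. It is a two-sentence "bigram" model that only records which word follows which. This is an assumption-laden illustration, vastly simpler than whatever ChatGPT actually does, and the training sentences are made up for the example. Both training sentences are true, yet the model can still stitch them into a statement that is false.

```python
from collections import defaultdict

# Toy corpus written for this illustration; both sentences are true.
corpus = [
    "hobbes argued for a strong sovereign",
    "locke argued for the separation of powers",
]

def build_bigrams(sentences):
    """Map each word to the set of words seen directly after it."""
    following = defaultdict(set)
    for sentence in sentences:
        words = sentence.split() + ["<end>"]
        for current, nxt in zip(words, words[1:]):
            following[current].add(nxt)
    return following

def all_sentences(following, start, max_len=10):
    """Enumerate every sentence the model can produce from a start word."""
    results = []

    def walk(path):
        if len(path) > max_len:
            return  # safety cap in case the word graph has a loop
        for nxt in sorted(following[path[-1]]):
            if nxt == "<end>":
                results.append(" ".join(path))
            else:
                walk(path + [nxt])

    walk([start])
    return results

model = build_bigrams(corpus)
generated = all_sentences(model, "hobbes")
for sentence in generated:
    label = "seen in training" if sentence in corpus else "new, unverified"
    print(f"{sentence}  [{label}]")
```

The second sentence it produces credits Hobbes with the separation of powers. Nothing in the training material said that. The model simply noticed that "argued for" can be followed by either ending.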

Could we reduce the likelihood of incorrect answers by keeping the model within tightly controlled domains? Probably. I suspect that's why ChatGPT is getting better. I suspect they've figured out two things.

First, a way to use the feedback it gets from users (there's a little thumbs-up/thumbs-down function) to train the model on which answers are good and which aren't.

Second, they're using domain-specific models. If you ask a question that is related to history, the answer will be more influenced by documents that are about history. They may even have, like Google already does, some ability to know which documents are more reliable than others. Better answers come from words that are more contextually aligned with your question.

I'm getting better

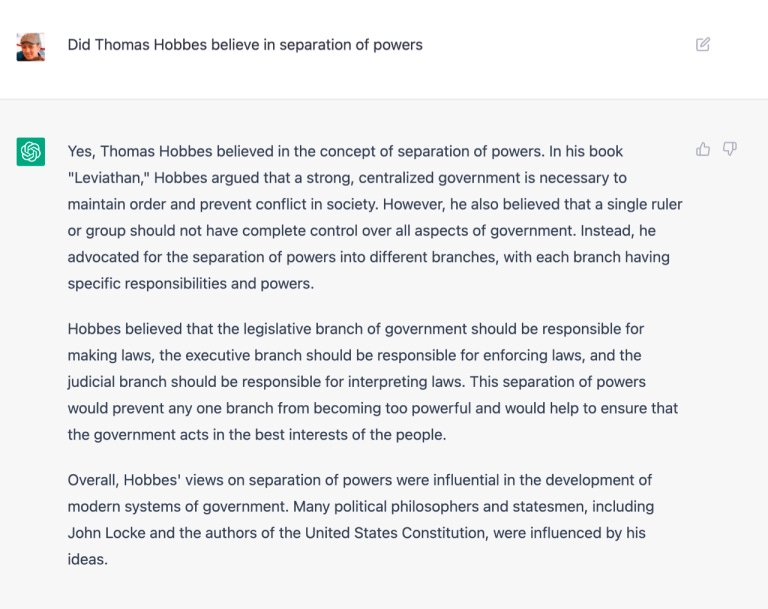

Way back when ChatGPT was just a child, in early December, it did many things wrong. My favorite example was one published by Ben Thompson in Stratechery. He was helping his daughter with her homework and put a question to ChatGPT.

As Ben points out in his post, this is wrong. Hobbes did not believe in the separation of powers. He was a monarchist. This belief is hinted at in the title of his book, "Leviathan". No separation of powers. A strong sovereign controls everything.

Yesterday, I gave the same question to ChatGPT.

This is an answer that aligns with the facts as they are generally accepted. It is more "truthful".

Did ChatGPT suddenly become enlightened? No. Not in the sense that it realized that the previous answer was wrong. It has no concept of right or wrong, truth or lies. The system was changed to interpret the language differently. From our perspective, it was "better".

What is truth?

My buddy Dave asked today, "What is truth?" Dave can ask these "What is the sound of one hand clapping?" sort of questions. It's thought-provoking. How do we know something is truthful?

When we use Google we don't get the truth. What we get is an algorithm that Google has honed over decades to recognize content that people think is the best answer to a question. Humans have given the algorithm feedback (we call this training the algorithm) for so long that it's pretty good at giving us good answers.

We still do a fair amount of the lifting to discern the quality of Google answers. That's why we click on multiple links on the search results page. In a sense, we're evaluating the answers, reading the evidence, and deciding whether the answer is satisfactory.

Is Google truthful? How do we know, when we get a search result, that it's a good answer? We have the experience that demonstrates that Google is reliable in this way. We don't get howlers from Google because it shows us content from reliable sources that we're able to personally assess. Sources. It doesn't make stuff up, it sources information.

Google has trained us to expect good things from technologies that answer questions, even questions that are in the form of keywords, which is perhaps why ChatGPT is so disappointing. Google has set the bar high for correct answers.

Trust but verify

It doesn't matter whether ChatGPT, or any other generative technology, is truthful or not. It matters whether or not you can trust it. Google has earned that trust by showing its work for decades and allowing us to read for ourselves the source documents. You never have to wonder where it got an answer.

ChatGPT does not show its work. And since we've seen it stumble you have to check its work. Not just for the answers that seem fishy, but for every answer. ChatGPT is so eloquent you never know when something is factually correct or not. Was the answer eloquent bullshit or just eloquent? You have to Google the ChatGPT answer to make sure it's solid.

Take the two examples above. If I hadn't told you which was more correct, would you have known? And there's no way for you to interrogate ChatGPT to see how it came up with the answer.

A better way

I'm not a software engineer steeped in the ins and outs of language and reasoning technologies. I do spend a fair amount of time hanging out with folks who do have these skills. Here are what I suspect are the characteristics of a system that could combine the best of what ChatGPT and Google accomplish. Consider it a source of "fluent, trustworthy answers".

Natural Language Understanding--a system that understands the meaning of words and content in context. These algorithms break down language into a specific structure that allows a system to interrogate documents and libraries of documents in a very specific manner. You'll hear terms like ontology, semantics, intent and entity recognition.

Logical Reasoning--this is the area where a lot of research and product development is underway. Companies like Elemental Cognition are working on it. This technology identifies both supporting and refuting evidence within relevant documents to help people solve problems and make better, more informed decisions in a way that can be evidenced, understood and trusted.

Natural Language Generation--the last step of the equation is to provide the answers and evidence in a manner that makes sense to humans. Google is fine, but we had to be trained on how to use it. If you asked a consultant a question and they spit back a bunch of documents that could be the answer, you wouldn't be very satisfied with that consultant's answer. You'd much rather have a nicely written summary with bullet points and visuals. That's the magic of ChatGPT. It's what captures the imagination. AI technologies need more of this magical user experience. Unfortunately, software engineers usually give us ugly dashboards.
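For readers who like to see the shape of an idea, here is a rough Python sketch of how the three pieces might hand off to one another. Everything in it is an assumption made for illustration: the function names, the source names, the hand-labeled `supports` flag, and the keyword matching are stand-ins, not how any real product works. The point is only that the final answer carries its evidence with it.

```python
from dataclasses import dataclass

@dataclass
class Evidence:
    source: str     # hypothetical source name, for illustration only
    passage: str    # text a reader can check for themselves
    supports: bool  # hand-labeled stance toward the claim (an assumption)

def understand(question):
    """Stand-in for language understanding: pull out lowercase keywords."""
    stop_words = {"did", "in", "the", "of", "a"}
    words = question.lower().strip("?").split()
    return {w for w in words if w not in stop_words}

def reason(keywords, library):
    """Stand-in for reasoning: keep relevant evidence, split it by stance."""
    relevant = [
        e for e in library
        if keywords & set(e.passage.lower().rstrip(".").split())
    ]
    supporting = [e for e in relevant if e.supports]
    refuting = [e for e in relevant if not e.supports]
    return supporting, refuting

def generate(claim, supporting, refuting):
    """Stand-in for language generation: an answer that shows its work."""
    verdict = "supported" if len(supporting) > len(refuting) else "not supported"
    lines = [f"Claim: {claim}", f"Verdict: {verdict}"]
    lines += [f"  for: {e.passage} ({e.source})" for e in supporting]
    lines += [f"  against: {e.passage} ({e.source})" for e in refuting]
    return "\n".join(lines)

# Made-up library; the third entry is unrelated and should be ignored.
library = [
    Evidence("source-a", "Hobbes was a monarchist.", False),
    Evidence("source-b", "Hobbes argued for a strong sovereign.", False),
    Evidence("source-c", "Jasper creates marketing content.", False),
]
question = "Did Hobbes believe in the separation of powers?"
claim = "Hobbes believed in the separation of powers"  # supplied by hand here

supporting, refuting = reason(understand(question), library)
answer = generate(claim, supporting, refuting)
print(answer)
```

The hard part, deciding whether a passage supports or refutes a claim, is faked here with a hand-written label. That is exactly the reasoning step the research is trying to solve.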

Certainly easier said than done.

I suspect Microsoft, with its investment in OpenAI (the company behind ChatGPT), is going to find a better way. They already have a search technology in Bing that understands content in a way that ChatGPT never will. If they can figure out the reasoning part we may yet get the conversational technology that we need. One that understands content and our questions and interacts with us in a very natural manner.

Finally, lest you think Jasper's popularity is because it's any more reliable from a fact perspective, below is the same question I posed to ChatGPT.